¶ FAQ

Table of Contents

- What is Orchestra?

- What exactly do you mean by Knowledge Orchestration?

- What is so special about Orchestra?

- What is a "digital twin" (or "logical twin", "virtual twin")?

- What does it mean to "overlay" Orchestra on my information?

- Modeling my business requires I create entities - What's an entity?

- You use the word "instrument" a lot - What are Instruments?

- How does Orchestra help me protect and control the sharing/use of my information?

- What do you mean by Knowledge Graph?

- What is an adapter?

- What is a locutor?

- What is a waiter?

- What is an information adapter?

- What is an analytic adapter?

- What are the classes and categories?

- What is the taxonomy?

- What are facts, facets and features?

- What is Knowledge Density?

¶ What is Orchestra?

Orchestra is a software platform purpose-built to help organizations improve their data management and analytics. It is built on top of a peer-to-peer, secure data-sharing technology called AvesTerra.

¶ What exactly do you mean by Knowledge Orchestration?

By Knowledge Orchestration, we mean transforming a set of data sources (databases, files, data streams...) and the domain knowledge associated with them into a knowledge graph. This involves working closely with our clients to make sure we understand the information they want to capture from their data, and how we can keep this data up-to-date to best serve their decision-making requirements.

The most distinctive and powerful aspect of Orchestra is its ability to integrate a large number of diverse systems, at scale, into a single seamless distributed knowledge space (i.e., knowledge orchestration). That is an immensely powerful capability: while we can use the Orchestra platform to build conventional query-response applications, doing so neither fully leverages nor highlights its unique strengths.

With an integrated knowledge space, an Orchestra analytic developer can write analytics that span countless data systems without ever having to deal with the massive amount of plumbing they would otherwise have to contend with if the information were spread out. One might suggest building a data federation system or a data lake (or data swamp) instead, but that approach is fraught with fundamental technical peril, especially when the data systems are distributed and constantly being updated and changed.

Once all relevant knowledge is truly integrated into a single seamless knowledge space, independent of platform, location, etc., we can template just about everything, and our analytics become highly reusable across an enormous spectrum of applications and scales.

¶ What is so special about Orchestra?

Orchestra offers secure, real-time, decentralized, federated learning. The GAFAMs of the world (Google, Apple, Facebook, Amazon, Microsoft) can do federated learning, but they all need some form of centralization (i.e., moving the data out of your company and onto their own servers) to do so. Additionally, the peer-to-peer network we rely on to solve this problem acts as a control system, which gives us highly effective real-time management of all data and data-based operations. We:

- provide a way to share data securely between various data sources, and manage data access policies with granular control;

- integrate diverse data sources into a common, consistent, leverageable model;

- enable organizations to create a digital twin of their operations, allowing them to monitor and experiment virtually with their operations in real time;

- run analytics in real time over your data, delivering daily reports so you can react as soon as possible to changes in your internal operations, rather than waiting for multi-month analyses whose conclusions arrive too late to act on;

- provide a true control system so that your organization's infrastructure and its managers can react automatically, in a way you see fit, to any foreseen or unforeseen event.

¶ What is a "digital twin" (or "logical twin", "virtual twin")?

A digital twin is a full, comprehensive data model of your organization: a virtual mirror image, within a set of computers, of things that are happening in the real world. It is meant to be kept up-to-date in real time, be modelled according to your organization's domain knowledge, and be duplicated as desired in order to probe how the system would react to a given event.

A digital twin is an incredibly powerful thing for an organization to have. Even a read-only version of this model, nothing more, already provides a great deal of oversight into the management of a complex system, since diagnostics of the system's behavior can be run from a single computer terminal. Add analytic adapters to the mix, and you gain a way to run automated science over your organization, gleaning useful information and metrics from your system's raw data. This can be crucial to reduce a system's informational complexity, make it digestible for human use, and showcase the important parts of the system in a way that truly aids decision-making by the organization's deciding parties.

Finally, copies of the current digital twin can be made for analytical purposes (without affecting the "true" digital twin), and the data of these clones can be changed arbitrarily. With such a scheme, you can use your digital twin(s) to understand how your system would react, or should react, to a complex event: propose several alternative ways of dealing with a current unforeseen problem, propagate each one within its own clone, and compare the clones to determine which solution or decision best solves the problem.
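The clone-and-compare idea can be sketched in a few lines. This is a toy illustration under loose assumptions, not how Orchestra stores twins: the "twin" is a hypothetical `Twin` holding stock levels, each candidate decision is applied to its own deep copy, and each clone is scored by the demand it cannot meet. All names (`Twin`, `apply_decision`, `unmet_demand`, `best_decision`) are made up for this example.

```python
import copy
from dataclasses import dataclass, field


@dataclass
class Twin:
    """Hypothetical, toy digital twin: current stock level per item."""
    stock: dict = field(default_factory=dict)


def apply_decision(twin, decision):
    """Propagate one candidate decision (stock adjustments) through a twin."""
    for item, delta in decision.items():
        twin.stock[item] = twin.stock.get(item, 0) + delta


def unmet_demand(twin, demand):
    """Score a twin after the event: total demand it cannot cover (lower is better)."""
    return sum(max(0, need - twin.stock.get(item, 0)) for item, need in demand.items())


def best_decision(true_twin, decisions, demand):
    """Evaluate every decision on its own clone; the true twin is never modified."""
    scores = {}
    for name, decision in decisions.items():
        clone = copy.deepcopy(true_twin)
        apply_decision(clone, decision)
        scores[name] = unmet_demand(clone, demand)
    return min(scores, key=scores.get), scores


true_twin = Twin({"masks": 100, "gloves": 50})
demand = {"masks": 150, "gloves": 80}  # the unforeseen event
decisions = {
    "reorder_masks": {"masks": 60},
    "reorder_gloves": {"gloves": 40},
    "split_order": {"masks": 30, "gloves": 30},
}
best, scores = best_decision(true_twin, decisions, demand)
print(best, scores)
```

Here `split_order` wins with 20 units of unmet demand, and `true_twin` still holds its original stock because only the clones were modified.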

¶ What does it mean to "overlay" Orchestra on my information?

An Orchestra server acts both as your organization's gateway into a Knowledge Graph and as a way to make access to your various data sources coherent. When setting up a Knowledge Graph, you can configure whether your data is shared globally over the open Internet, shared only with specific organizations, or kept entirely internal to your company or to a part of it. When modeling your data within the Knowledge Graph, we can represent your various data sources (databases, filesystems, data streams, ...) consistently: this allows you to leverage the full capabilities (logistical, analytical...) of all the data within your organization.

¶ Modeling my business requires I create entities – what's an entity?

The fundamental construct Orchestra uses to model real-world objects and concepts is the entity. As this is one of the core concepts of Orchestra, please visit this section to learn more about it.

¶ You use the word "instrument" a lot – what are Instruments?

Instruments are, so to speak, "units of visualization or data management". They are what you would like to have on your dashboard or control panel, and they are the building blocks from which we compose the UX/UI of our apps. These include statistical graphs, gauges, indicators, bar charts, line charts, annotated flow charts, maps, buttons, levers, etc.

¶ How does Orchestra help me protect and control the sharing/use of my information?

Orchestra, the technology that enables the creation of Knowledge Graphs, was built from the ground up with secure data sharing in mind. Within the Knowledge Graph, there are specific entities called compartments and identities, which roughly correspond to groups and users, respectively. Every network request, whether external or internal to the Knowledge Graph, is signed with an authorization parameter, and an Authenticator Adapter makes sure that only identities granted access to a particular compartment can access the data associated with that compartment. Orchestra provides an application that makes this compartment/identity management easy for our clients to handle. With Orchestra, you can choose whichever access policy suits your needs, making any subset of your data more or less accessible.
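The compartment/identity check can be pictured with the following sketch. It is a simplification, assuming a plain lookup from authorization value to identity; the real Authenticator Adapter's verification of signed requests is not shown, and `Compartment`, `Authenticator`, `can_read` and `read` are hypothetical names.

```python
from dataclasses import dataclass, field


@dataclass
class Compartment:
    """Roughly a group: some data plus the identities granted access to it."""
    name: str
    data: dict = field(default_factory=dict)
    granted: set = field(default_factory=set)


class Authenticator:
    """Hypothetical stand-in for the Authenticator Adapter's access check."""

    def __init__(self, tokens):
        self.tokens = tokens  # authorization value -> identity (roughly, a user)

    def can_read(self, compartment, authorization):
        identity = self.tokens.get(authorization)
        return identity is not None and identity in compartment.granted

    def read(self, compartment, key, authorization):
        if not self.can_read(compartment, authorization):
            raise PermissionError(f"access to compartment {compartment.name!r} denied")
        return compartment.data[key]


finance = Compartment("finance", {"q3_budget": "1.2M"}, {"alice"})
auth = Authenticator({"tok-alice": "alice", "tok-bob": "bob"})
print(auth.read(finance, "q3_budget", "tok-alice"))
print(auth.can_read(finance, "tok-bob"))
```

Granting Bob access is then a matter of adding `"bob"` to the compartment's `granted` set, which mirrors the idea that access policy lives on the compartment rather than in each application.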

¶ What do you mean by Knowledge Graph?

A knowledge graph is a way of organizing and analyzing data that can produce interesting internal feedback loops: whatever data is analyzed produces new data, which can be fed back into the knowledge graph. This combination of data and analytics that constantly update and improve themselves, together with mechanisms for exporting useful information, is an optimal way to turn a multitude of diverse data sources into information that can be concretely applied to decision-making. Put another way, a knowledge graph is an observable, interactive, agent-based model of raw, curated, or processed data entities.
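The feedback loop can be illustrated with a minimal sketch, assuming the graph is just a set of (subject, predicate, object) triples rather than Orchestra's actual storage. A hypothetical analytic derives new `part_of` links, and its output is fed back in as input until no new data appears.

```python
def transitive_part_of(graph):
    """Hypothetical analytic: from A part_of B and B part_of C, derive A part_of C."""
    links = [(s, o) for s, p, o in graph if p == "part_of"]
    return {(a, "part_of", c) for a, b in links for b2, c in links if b == b2}


def run_feedback_loop(graph, analytics):
    """Re-run the analytics, feeding their output back in, until nothing new appears."""
    graph = set(graph)
    while True:
        new = set()
        for analytic in analytics:
            new |= analytic(graph) - graph
        if not new:
            return graph
        graph |= new  # derived data becomes input for the next pass


facts = {
    ("pump-7", "part_of", "line-2"),
    ("line-2", "part_of", "plant-A"),
    ("plant-A", "part_of", "region-East"),
}
result = run_feedback_loop(facts, [transitive_part_of])
print(sorted(result - facts))
```

The link from `pump-7` to `region-East` only appears on the second pass, because it is built from data the first pass produced.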

¶ What is an adapter?

An adapter is a code component that calls the adapt method and implements how certain entities respond to invoke calls.

To learn more about adapters, please visit this section.
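The division of labor described above (the adapter calls adapt; the adapter's own code decides how its entities respond) might look like this. This is a sketch under stated assumptions: the `adapt` function below is a hypothetical stand-in that pops calls from an in-memory queue playing the role of an outlet, not the real AvesTerra API, and `SensorAdapter` with its `SET`/`GET` handling is made up for illustration.

```python
from collections import deque


def adapt(outlet, handler):
    """Hypothetical stand-in for the platform's adapt call: hand the next
    invoke call queued on the adapter's outlet to the adapter's handler."""
    call = outlet.popleft()
    return handler(**call)


class SensorAdapter:
    """Hypothetical adapter: decides how its entities respond to invoke calls."""

    def __init__(self):
        self.values = {}  # (entity, attribute) -> value

    def handle(self, method, entity, attribute, value=None):
        if method == "SET":
            self.values[(entity, attribute)] = value
            return None
        if method == "GET":
            return self.values.get((entity, attribute))
        raise ValueError(f"method {method!r} not supported by this adapter")


outlet = deque([
    {"method": "SET", "entity": "pump-7", "attribute": "TEMPERATURE", "value": 71.5},
    {"method": "GET", "entity": "pump-7", "attribute": "TEMPERATURE"},
])
adapter = SensorAdapter()
results = []
while outlet:  # a long-running adapter would keep calling adapt as calls arrive
    results.append(adapt(outlet, adapter.handle))
print(results)
```

Swapping in a different `handle` method changes how the entities behave without touching the code that receives the calls.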

¶ What is a Locutor?

A Locutor is a data structure containing all the arguments of an HGTP invoke call. The term originates from a play on the words Locator and Locution. It is generally used to describe the location of a piece of knowledge within an entity.

For more information on locutors, please visit this section.
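A locutor can be pictured as an immutable bundle of call arguments. The sketch below uses a hypothetical subset of fields (`entity`, `method`, `attribute`, `instance`, `key`, `value`, `authorization`); the real HGTP argument list is not reproduced here, and `location()` is an illustrative helper, not part of any actual API.

```python
from dataclasses import dataclass, replace
from typing import Any, Optional


@dataclass(frozen=True)
class Locutor:
    """Hypothetical subset of the arguments bundled into one invoke call."""
    entity: str
    method: str = "GET"
    attribute: Optional[str] = None
    instance: int = 0
    key: Optional[str] = None
    value: Any = None
    authorization: Optional[str] = None

    def location(self) -> str:
        """Where in the entity the addressed piece of knowledge lives."""
        parts = [self.entity]
        if self.attribute:
            parts.append(self.attribute)
        if self.instance:
            parts.append(str(self.instance))
        if self.key:
            parts.append(self.key)
        return "/".join(parts)


read = Locutor("pump-7", attribute="TEMPERATURE", instance=2, key="max")
write = replace(read, method="SET", value=80.0)  # same location, different call
print(read.location(), write.method, write.value)
```

Making the structure frozen and deriving new calls with `dataclasses.replace` keeps the "where" (the location) separate from the "what" (the method and value) of each call.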

¶ What is a waiter?

Waiters (or subscribers) are agents that call the "WAIT" command on an outlet; if the outlet has subscribed to events on one or more entities, those events are routed to the waiting process. This is useful for being notified of changes in the Knowledge Graph or of events occurring in the real or cyber world, and for system coordination/synchronization.

Visit this section to learn more about this mechanism.
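The subscribe-then-WAIT pattern can be sketched with a thread-safe queue per outlet. The classes below (`Outlet`, `EventRouter`) and their methods are hypothetical; they only model the routing rule described above: events reach a waiting process only for entities its outlet subscribed to.

```python
import queue
import threading


class Outlet:
    """Hypothetical outlet: queues events for the entities it subscribes to."""

    def __init__(self):
        self.subscriptions = set()
        self.events = queue.Queue()

    def subscribe(self, entity):
        self.subscriptions.add(entity)

    def wait(self, timeout=None):
        """The WAIT step: block until an event is routed to this outlet."""
        return self.events.get(timeout=timeout)


class EventRouter:
    """Hypothetical router delivering each event only to subscribed outlets."""

    def __init__(self, outlets):
        self.outlets = outlets

    def publish(self, entity, event):
        for outlet in self.outlets:
            if entity in outlet.subscriptions:
                outlet.events.put((entity, event))


outlet = Outlet()
outlet.subscribe("pump-7")
router = EventRouter([outlet])

received = []
waiter = threading.Thread(target=lambda: received.append(outlet.wait(timeout=5)))
waiter.start()
router.publish("pump-3", "TEMPERATURE_CHANGED")  # not subscribed: never routed
router.publish("pump-7", "TEMPERATURE_CHANGED")
waiter.join()
print(received)
```

The waiting thread blocks without polling and wakes only when a relevant event arrives, which is what makes the pattern suitable for coordination and synchronization.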

¶ What is an information adapter?

An information adapter (sometimes called a connection adapter or link adapter) is, like all adapters, a software client to the Knowledge Graph. Its specific purpose is to handle the ingestion of data into the Knowledge Graph, potentially in real time. This means taking a data source (database, data stream, filesystem, ...) and automatically inserting its content, in the desired format, into the Knowledge Graph. This allows us to organize the Knowledge Graph in a consistent, configurable way, and keep it up-to-date with its data sources in real time without time-consuming changes to code or data.
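A minimal sketch of configurable, repeatable ingestion, assuming the graph is a plain dict of entity to attributes and the source is a list of rows. The `ingest` function and the column-to-attribute `mapping` are hypothetical; the point is that layout comes from configuration, and re-running on unchanged data changes nothing.

```python
def ingest(rows, graph, key_field, mapping):
    """Upsert each source row as an entity. `mapping` renames source columns to
    graph attributes, so the layout is configured rather than hard-coded.
    Returns (created, updated); re-running on unchanged rows is a no-op."""
    created = updated = 0
    for row in rows:
        entity_id = str(row[key_field])
        attrs = {attr: row[col] for col, attr in mapping.items() if col in row}
        current = graph.get(entity_id)
        if current is None:
            graph[entity_id] = attrs
            created += 1
        elif any(current.get(attr) != value for attr, value in attrs.items()):
            current.update(attrs)
            updated += 1
    return created, updated


graph = {}
mapping = {"temp_c": "TEMPERATURE", "site": "LOCATION"}
snapshot = [
    {"id": 7, "temp_c": 70.1, "site": "A"},
    {"id": 8, "temp_c": 65.0, "site": "B"},
]
first = ingest(snapshot, graph, "id", mapping)   # initial load
second = ingest(snapshot, graph, "id", mapping)  # nothing changed
third = ingest([{"id": 7, "temp_c": 71.5, "site": "A"}], graph, "id", mapping)
print(first, second, third, graph["7"])
```

In a real-time setting, the same function would simply be called on each new batch from the stream.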

¶ What is a knowledge adapter?

A knowledge adapter (sometimes called a visualization adapter) is, like all adapters, a software client to the Knowledge Graph. Its specific purpose is to format the data within the Knowledge Graph so that it can be fed into a visualization front-end, application, or dashboard. This allows us to use the Knowledge Graph's data to feed whatever front-end technology best serves human UI/UX needs.
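As a sketch of the formatting step, assume the graph is a dict of entity to attributes and the front-end wants a small JSON document per chart. The `chart_payload` function and its output shape are hypothetical; any front-end would define its own expected format.

```python
import json


def chart_payload(graph, attribute, title):
    """Flatten one attribute across entities into JSON that a hypothetical
    bar-chart instrument could render (labels on one axis, values on the other)."""
    points = sorted(
        (entity, attrs[attribute]) for entity, attrs in graph.items() if attribute in attrs
    )
    return json.dumps({
        "title": title,
        "labels": [entity for entity, _ in points],
        "values": [value for _, value in points],
    })


graph = {
    "pump-7": {"TEMPERATURE": 71.5, "LOCATION": "A"},
    "pump-8": {"TEMPERATURE": 65.0, "LOCATION": "B"},
    "site-A": {"LOCATION": "Lyon"},
}
payload = chart_payload(graph, "TEMPERATURE", "Pump temperatures")
print(payload)
```

Entities lacking the attribute (here `site-A`) are simply left out of the chart.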

¶ What is an analytic adapter?

Often referred to simply as an "analytic", an analytic adapter is, like all adapters, a software client to the Knowledge Graph. Its specific purpose is to run some form of data analysis. Analytic adapters are the core "automated science" part of Orchestra: some run standard statistical algorithms, some use machine learning, and others enable effective agent-based modelling.
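A "standard statistics" analytic might be as simple as the following sketch: compute a z-score per entity, write it back as a derived attribute, and flag outliers. The graph layout, the `flag_outliers` name, the `_ZSCORE` suffix and the threshold of 2 are all assumptions for illustration.

```python
import statistics


def flag_outliers(graph, attribute, threshold=2.0):
    """Hypothetical statistical analytic: compute a z-score for `attribute` on
    every entity that has it, write the score back as a new attribute, and
    return the entities whose |z| exceeds `threshold`."""
    values = {entity: attrs[attribute] for entity, attrs in graph.items() if attribute in attrs}
    if len(values) < 2:
        return []
    nums = list(values.values())
    mean = statistics.mean(nums)
    stdev = statistics.pstdev(nums)
    flagged = []
    for entity, value in values.items():
        z = 0.0 if stdev == 0 else (value - mean) / stdev
        graph[entity][attribute + "_ZSCORE"] = z  # derived data written back
        if abs(z) > threshold:
            flagged.append(entity)
    return sorted(flagged)


temperatures = [70.0, 71.0, 69.0, 70.0, 72.0, 68.0, 70.0, 95.0]
graph = {f"pump-{i}": {"TEMPERATURE": t} for i, t in enumerate(temperatures, start=1)}
flagged = flag_outliers(graph, "TEMPERATURE")
print(flagged)
```

Because the z-scores are stored on the entities, other analytics or dashboards can consume them like any other attribute.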

¶ Each entity has a category and class identified for it – what are they used for?

Categories and classes in the knowledge space are fairly simple. To date, the category and class taxonomies have been built ad hoc, can be readily extended, and are identical. When an entity is created, it is helpful to assign it a class (other than NULL_CLASS) so that anyone looking into the knowledge space can tell what it represents. Category is just a way of assigning, say, a superclass or some other higher-level grouping to an entity, but we really haven’t done much with that.

Technically, the knowledge space can support multiple simultaneous ontologies, as each entity also has a context that can be used to identify which ontology it belongs to. At present, the ontologies are implicit (i.e., in the designer’s head), but eventually they could be encoded/represented in the knowledge space itself. There’s no particular need for that until there are tools that need to do automated inferencing. For now, all the use cases have been heavily constrained, so the meaning/use of the classes has been unambiguous.

¶ I'm trying to understand the significance of the Attributes in the Avial Taxonomy. How are they used by Orchestra?

Having a common lexicon is the reason for the attribute taxonomy. If attributes were plain strings, anything would go, and we’d quickly end up with a knowledge space that was a babbling mess.

Having a taxonomy allows the different programming languages to use an enumerated type, which compilers can check syntactically and semantically. In general, we always extend a taxonomy and almost never refactor it, as refactoring would invalidate everything that is currently in the knowledge space.
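The difference between an enumerated taxonomy and free-form strings can be shown with a small sketch. The `Attribute` members below are a hypothetical slice, not the real Avial taxonomy, and `set_fact` is an illustrative helper. In Python, a misspelled member such as `Attribute.TEMPRATURE` also fails at runtime and is reported by static type checkers, while the runtime check below rejects raw strings.

```python
from enum import IntEnum


class Attribute(IntEnum):
    """Hypothetical slice of an attribute taxonomy (not the real Avial one)."""
    NULL = 0
    NAME = 1
    LOCATION = 2
    TEMPERATURE = 3
    PRESSURE = 4  # extension: appended, so existing codes keep their meaning


def set_fact(store, entity, attribute, value):
    """Only members of the taxonomy are accepted as attributes."""
    if not isinstance(attribute, Attribute):
        raise TypeError(f"{attribute!r} is not in the attribute taxonomy")
    store.setdefault(entity, {})[attribute] = value


store = {}
set_fact(store, "pump-7", Attribute.TEMPERATURE, 71.5)
try:
    set_fact(store, "pump-7", "temprature", 71.5)  # free-form string: rejected
    rejected = False
except TypeError:
    rejected = True
print(store, rejected)
```

Extending the taxonomy by appending `PRESSURE` leaves `TEMPERATURE` at code 3, so anything already stored under that code keeps its meaning; renumbering the members instead would silently change what existing data means.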

¶ How do I know which to use: facets, features, or facts?

We explore this in the section about Avial modeling.

¶ What is Knowledge Density and how does it apply to the LEDR Knowledge Service?

Knowledge density (KD) is defined as the level of connectedness in the graph. In mathematical terms, it is essentially KD = R / E, where E is the number of entities and R is the number of references (relationships) between entities. So 10 entities with 10 references give a KD of 1, and 1 trillion entities with 1 trillion references still give a KD of 1.

Having a zillion entities (E = 1 zillion) but no computed relationships among them (R = 0) is essentially just a regular database. Doing relational joins generates R. Of course, relational joins are computationally expensive on the fly, especially if you have to do many of them repeatedly across many tables. So taking a zillion entities, joining the daylights out of them, and saving the results as you go along creates a graph. As such, while E is important, R is also important. Given a set E, KD matters because it is an indicator of how well E has been linked (i.e., R). Ideally, "good things should happen roughly when R is about 1000 times of E", as J put it, which means a KD of 1000 is the ideal level to achieve.

With all the servers linked into one knowledge space, this is very nearly the actual algorithm as it really doesn’t matter where around the planet the servers (entities) are located.
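The definition KD = R / E and the idea that saved joins generate R can be sketched together. This assumes references are stored as (entity, entity) pairs; `knowledge_density` and `join_references` are hypothetical helper names, and the pumps/sensors data is made up.

```python
from itertools import product


def knowledge_density(entities, references):
    """KD = R / E: number of references divided by number of entities."""
    if not entities:
        raise ValueError("KD is undefined when there are no entities")
    return len(references) / len(entities)


def join_references(left, right):
    """Materialize an equi-join as saved references: link each left entity to
    every right entity that shares its join key (here, a site)."""
    return {
        (a, b)
        for (a, key_a), (b, key_b) in product(left.items(), right.items())
        if key_a == key_b
    }


pumps = {"pump-1": "A", "pump-2": "A", "pump-3": "B"}  # entity -> site
sensors = {"sensor-1": "A", "sensor-2": "B", "sensor-3": "B"}
entities = set(pumps) | set(sensors)

kd_before = knowledge_density(entities, set())  # a plain database: R = 0
references = join_references(pumps, sensors)    # join once, save the result
kd_after = knowledge_density(entities, references)
print(len(entities), len(references), kd_before, round(kd_after, 2))
```

Once saved, the four references no longer have to be recomputed by a join at query time, and each further join over the same entities raises R, and therefore KD, without changing E.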

¶ Knowledge Density Growth Table example

| IOC | Q1 | Q2 | Q3 | Q4 | Q5 | Q6 | Q7 | Q8 | Q.... |
|---|---|---|---|---|---|---|---|---|---|
| E | 5.40E+04 (54,000) | 5.40E+05 (540,000) | 5.40E+06 (5,400,000) | 5.40E+07 (54,000,000) | 5.40E+08 | 5.40E+09 | 5.40E+10 | 2.70E+11 | 1.35E+12 |
| R | 1.00E+06 (1,000,000) | 2.51E+07 (25,100,000) | 6.28E+08 | 1.57E+10 | 3.14E+11 | 6.28E+12 | 6.28E+13 | 3.14E+14 | 1.57E+15 |
| KD | 18.59 | 46.48 | 116.20 | 290.51 | 581.02 | 1162.04 | 1162.04 | 1162.04 | 1162.04 |
| Goal for KD | 15.00 | 50.00 | 100.00 | 250.00 | 500.00 | 1000.00 | 1000.00 | 1000.00 | 1000.00 |
| Increase E by | 10 | 10 | 10 | 10 | 10 | 10 | 5 | 5 | |
| Increase R by | 25 | 25 | 25 | 20 | 20 | 10 | 5 | 5 | |

This table shows how E, R and KD are expected to grow over time, along with the KD goal for each quarter and the factor by which E and R must increase from one quarter to the next to reach it.
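The projection can be recomputed from the Q1 values and the per-quarter growth factors. A useful consequence of KD = R / E is that each quarter KD is multiplied by (R factor) / (E factor): 25 / 10 = 2.5 early on, 20 / 10 = 2 later, and 1 once E and R grow at the same rate, which is why KD flattens from Q6 onward. In this sketch the R factors from Q6 onward (×10, ×5, ×5) follow from the R row itself, and "Q9" stands for the table's "Q...." column. Starting from the rounded Q1 figures, the recomputed KD lands slightly below the table's values (for example 18.52 rather than 18.59 for Q1), presumably because the table was computed from unrounded inputs.

```python
E_FACTORS = [10, 10, 10, 10, 10, 10, 5, 5]  # "Increase E by", Q1 -> Q2 ... Q8 -> Q9
R_FACTORS = [25, 25, 25, 20, 20, 10, 5, 5]  # "Increase R by"; last three follow from the R row
KD_GOALS = [15, 50, 100, 250, 500, 1000, 1000, 1000, 1000]


def project(e0, r0, e_factors, r_factors):
    """Grow E and R by their per-quarter factors and recompute KD = R / E."""
    e, r = [e0], [r0]
    for fe, fr in zip(e_factors, r_factors):
        e.append(e[-1] * fe)
        r.append(r[-1] * fr)
    kd = [ri / ei for ei, ri in zip(e, r)]
    return e, r, kd


E, R, KD = project(5.4e4, 1.0e6, E_FACTORS, R_FACTORS)
met = [kd >= goal for kd, goal in zip(KD, KD_GOALS)]
for quarter, (kd, goal, ok) in enumerate(zip(KD, KD_GOALS, met), start=1):
    print(f"Q{quarter}: KD = {kd:8.2f}  goal = {goal:6d}  {'met' if ok else 'below goal'}")
```

As in the table, Q2 falls just short of its goal of 50, and the goal of 1000 is reached in Q6 and then held.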

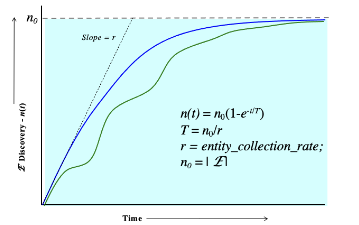

¶ Entity Discovery Growth Chart

This chart shows how the number of entities is expected to increase over time, with the total number of entities eventually reaching a plateau.